We just bought a new SAN and I am in the midst of migrating our SQL Database Instances to it. One of the challenges I am facing is that I have several very large tables (billions of rows) and some of them contain LOB data. So, simply dropping the non-clustered indexes and then recreating the clustered index online is not an option, and, of course, all of the systems have to remain online during the migration. I certainly cannot get a downtime long enough (hours) to move some of my largest tables. So, the challenge I’ve been facing, is how to move these tables with no (or at least minimal) downtime, and preferably with very little impact to production performance.

Well, I have one thing really going for me – I already have my filegroups setup exactly the way I want them. My partitions are stretched across all the right filegroups, my tables are separated off into certain groups according to various logic on each database, and I’m not really looking to change anything like that; at least not with this migration. So, ultimately, I just need to figure out how to move each filegroup to the new array, without interrupting anything.

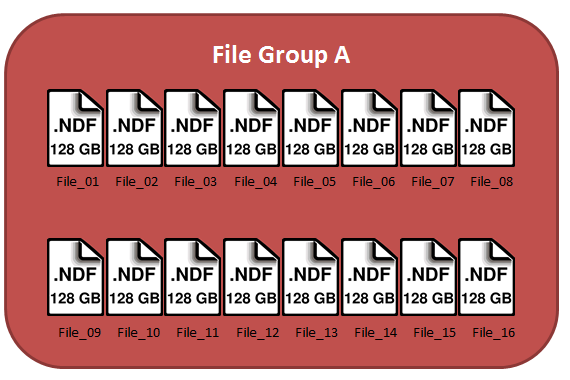

So, let’s look at one of my filegroups: it consists of 16 files, each of size 128GB, for a total size of 2TB. Like so:

Now, my original idea was to create 16 new files in the same filegroup but on the new array and then empty and remove the old files one by one, until this filegroup was completely on the new SAN. While, this would technically work, it causes a huge problem that I learned about from one of Paul Randal’s (Blog | Twitter) lessons during the SQLskills Immersion Event 1: Internals and Performance. Paul also discusses the problem in a SQL Server Pro article from August 2011. Basically, the problem is that due to the “proportional fill” method of writing to datafiles in a filegroup, you will cause your files to be filled unevenly, resulting in a hot-spot at whichever file(s) have the most freespace at the end of your process.

Now, my original idea was to create 16 new files in the same filegroup but on the new array and then empty and remove the old files one by one, until this filegroup was completely on the new SAN. While, this would technically work, it causes a huge problem that I learned about from one of Paul Randal’s (Blog | Twitter) lessons during the SQLskills Immersion Event 1: Internals and Performance. Paul also discusses the problem in a SQL Server Pro article from August 2011. Basically, the problem is that due to the “proportional fill” method of writing to datafiles in a filegroup, you will cause your files to be filled unevenly, resulting in a hot-spot at whichever file(s) have the most freespace at the end of your process.

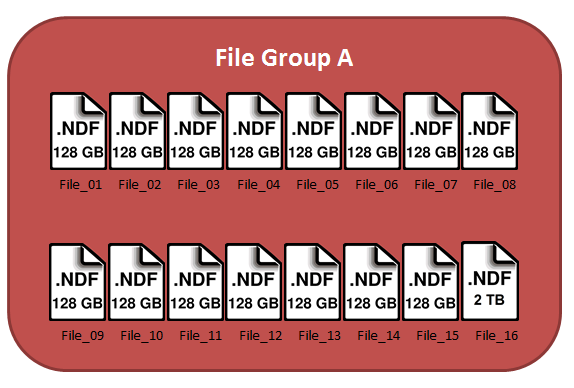

So, to avoid this in my environment, I decided to first, grow one of the files in my filegroup to a large enough size to hold all of the data in that filegroup. So, assuming that each of my files in this filegroup are almost full, I will grow File_16 to 2 TB.

Now, my plan is to empty and remove each file in the filegroup, except File_16. This will cause all of the data to be moved down to this one file. The actual emptying of each file has the potential to be very intensive, however, I have found minimal effects on my servers when doing this. This process can take a very long time, but since I have to move these datafiles online, this is the best way I have found in this sort of scenario. Test this first in a non-production environment. I would write and issue a script similar to this:

Now, my plan is to empty and remove each file in the filegroup, except File_16. This will cause all of the data to be moved down to this one file. The actual emptying of each file has the potential to be very intensive, however, I have found minimal effects on my servers when doing this. This process can take a very long time, but since I have to move these datafiles online, this is the best way I have found in this sort of scenario. Test this first in a non-production environment. I would write and issue a script similar to this:

DBCC SHRINKFILE(File_01, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_01] DBCC SHRINKFILE(File_02, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_02] DBCC SHRINKFILE(File_03, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_03] DBCC SHRINKFILE(File_04, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_04] DBCC SHRINKFILE(File_05, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_05] DBCC SHRINKFILE(File_06, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_06] DBCC SHRINKFILE(File_07, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_07] DBCC SHRINKFILE(File_08, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_08] DBCC SHRINKFILE(File_09, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_09] DBCC SHRINKFILE(File_10, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_10] DBCC SHRINKFILE(File_11, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_11] DBCC SHRINKFILE(File_12, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_12] DBCC SHRINKFILE(File_13, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_13] DBCC SHRINKFILE(File_14, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_14] DBCC SHRINKFILE(File_15, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_15] |

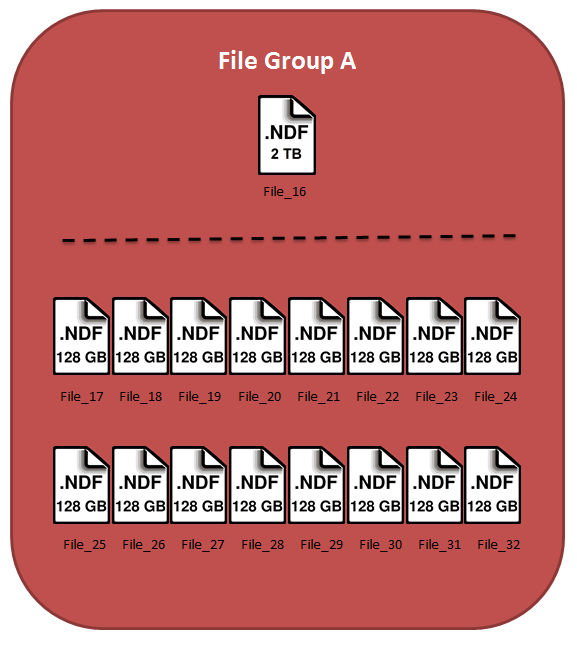

At this point, I add the 16 new files onto the new SAN, and we end up with one large file on the old SAN and 16 new files on the new SAN:

Now, all there is left to do is issue one more empty command to stripe that 2TB of data amongst the 16 new files.

Now, all there is left to do is issue one more empty command to stripe that 2TB of data amongst the 16 new files.

DBCC SHRINKFILE(File_16, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_16] |

After this command has finished, the data will be nearly perfectly striped across all 16 files, on the new SAN. And this was accomplished with no downtime and, from my experience, very little impact to production performance. Your particular environment may see more performance impact, so please test in a non-production environment before trying this with a live system.

Hey.. I have a question.. I believe the scenerio you mentioned is for databases with only one filegroup. I was wondering how do we achive this if there are multiple filegroups and only of the filegroups has to be moved to new san?

Is it that we will have to manually move the clustered and non clustered indexes to the new filegrup added on the new san?

Ashwin, I know you asked for me to ignore your comment, but I just wanted to clarify for the sake of the comment. This method works regardless of the number of filegroups you are dealing with, because you are only dealing with one filegroup at a time. You could repeat as necessary for each filegroup you want to move. Also, the benefit of this method is that you do not have to rebuild/move any indexes at all – which would be an offline operation for indexes including LOB data in SQL Server 2008.

Awsome post. Thanks for the great tip.

Hi,I have a question..

in the post:

Basically, the problem is that due to the “proportional fill” method of writing to datafiles in a filegroup, you will cause your files to be filled unevenly, resulting in a hot-spot at whichever file(s) have the most freespace at the end of your process.

But I think, since the new 16 datafiles are all empty, they will be filled with data by shrinking old datafiles, always with the same rate. So create new datafiles at first, and shrink old ones one by one, will also make the data distribute evenly. Am I right?

Thanks for the tip, it saved me a lot of time. The only thing to remember is to have enough space for log growth.

I’m glad to hear that it helped you out! And yes, good point about the log space.