I had to whip this up to add to the evil Meme Noise. Enjoy, or Sorry, depending on your reaction:

Dealing with Big Data – T-SQL Tuesday #027

As part of T-SQL Tuesday, I wanted to discuss one of the experiences I have had with dealing with Big Data in SQL.

Over the course of the last few years, our Development group has created a new flagship product that our entire enterprise is now using for day-to-day activities. It is a system that allows the business users to have direct power over the way their data is calculated and manipulated without us having to make direct changes to compiled code or database procedures. As a result, we had to create an extremely abstracted data model, in which no data can really be grouped by any kind of “business” idea, and only by general types of data, such as “inputs”, “calculated data”, and “configuration”. This is all fed into our system from various sources, recorded, versioned, calculated, and reported out through various methods.

It is also a requirement that we keep every version of any data we receive or calculate, as well as every version of the “equations” our business users have created, referencing these data. This enables them to better explain and modify business decisions based on the data available at the time that operations made the decisions they did. It also makes audits and compliance checking completely traceable and transparent.

As you may realize, this makes for an enormously complex, but flexible, and powerful, system. It also creates a very quickly growing amount of data. This system has only been in production for 13 months so far, and we already have added 3.2 TB of data (with about 6 billion rows in our largest table) and the growth-rate is increasing as we incorporate more areas of business into this model. We are currently experiencing a growth of over 350GB per month in our production database and we are expecting this to continue to expand to over 500 GB per month by the end of the year. Due to the nature of our industry, the data is continually resettled by the market and this is done heavily for the first 6 months. So, the data for 6 months ago will be at least 4 times as large as the data that is processed today. This is, by no means, the largest database or the largest growth rates out there, but for us, it’s a huge jump from our legacy systems.

So, we have had to rethink the way we address this data. Our old ways of optimizing and handling the database didn’t translate 1:1 to this new VLDB. This manifested specifically in one particular design consideration, early on in development.

When I was still developing the database, we were only receiving 5 to 10 GB of data per day in inputs and calcualated data, however, I knew this was still the early stages and that the amount of data was going to grow significantly and I wanted to be ready before we got there. So, I started testing partitioning schemes to divide up the data, to make the data easily queried, but still seperated enough.

Initially, I tried to create a variation of the sliding window scenario, based on months in the future, dividing out into weeks in the future, dividing out into days for the days surrounding “today”, collapsing back into weeks and months in the past. The scenario was very complex to setup, but I got the management script created and it was working, but it caused an issue on the switch-out, in which data was unavailable for too long while trying to collapse the weeks into months. With our resettlement timeline, this was unacceptable. So, I called Kimberly Tripp (Blog | Twitter), the partitioning expert.

After a conversation about what I was trying to accomplish, she suggested that I stop trying to do a rolling window scenario for this particular case. Instead, since I wanted the granularity of a single day, go ahead and partition at a day, but create a single table for each year, and then wrap up those years using a partitioned view. I implemented a variation of this, based on creating a BIGINT that contains both the day in question and an indicator for which market I was dealing with (so that I could have my data split between markets as well).

This has proven to be a very successful method that has kept database round-trips, consisting of 7 to 12 million rows each, very quick and completely within the scope of our users’ needs. It has also made my partition maintenance very simple. I only have to add another year out into the future once a year, to keep the machine rolling. And, once my data has gotten to a place where the business users can allow me to archive off old data, I’ll have a clear delineation upon which to divide my data. It has been a clear win for my situation and shows promise of scalability far beyond where we are even today.

Resolving Windows Authentication Annoyances using Klist

In my SQL environment, I manage all of my SQL Server rights by Windows Authentication, based upon Active Directory groups. This allows me to centralize my security administration into groups for specific types of users within my environment. I also get the benefit of my developers not ever accessing my databases with connection strings containing a clear-text password to my databases.

I have had two specific issues arising from managing my security in this way. One of my problems is that this allows my domain administrators to have some level of control over who might have access to a database. I have, on at least two occasions, had an over-zealous admin assign rights to a user “because the user asked for it” without my knowledge. I have already posted my solution to this problem in my post, Monitoring Active Directory Groups for Windows Authentication in SQL Server Using PowerShell. In this post, I would like to discuss my other issue, propagating changes in Active Directory quickly.

When I first started using Windows Authentication for my SQL Servers, based upon Active Directory groups, I would notice that I would add a user to a group in Active Directory and it would take a long time before the user was actually able to use the rights; sometimes they even had to reboot. This led to a lot of frustration on both my side and the users’. It even caused us to jump back to SQL Authentication on some time-sensitive rollouts, because we had no idea why the Active Directory rights were “not working”. So, after a few embarrassing episodes, I decided to dig into why this was happening.

To me, it made no sense why a Microsoft core enterprise system (SQL Server) talking to another Microsoft core enterprise system (Active Directory) couldn’t figure out that new permissions had been granted to a user’s group. So, I dug a little into how Kerberos works and found that the group membership is passed in the Kerberos ticket, not just a reference back to Active Directory (which I had thought). So, this meant that when a ticket was granted to a user’s session, the groups that the particular user was a member of were held until either the ticket was cleared or expired.

It can be useful to see what tickets you have open, which you can do by typing “klist” into a command prompt. This, however, only lets you see your current tickets.

So, that led me to looking into what actions cause your Kerberos tickets to regenerate. Remember that little message bubble that shows up in one of your sessions right after you have changed your password?

When you lock and unlock your computer, you are causing Windows to request new Kerberos tickets. This also explains why rebooting a user’s machine caused the rights to start working, even though it was complete overkill.

When you lock and unlock your computer, you are causing Windows to request new Kerberos tickets. This also explains why rebooting a user’s machine caused the rights to start working, even though it was complete overkill.

Another way to force Windows to request new Kerberos tickets is to run “klist purge” from the command prompt. This explicitly asks Windows to dump your currently Kerberos tickets and thus, request new ones.

Although this is a simple problem, solving it finally relieved a nagging headache I had experienced from time to time.

Monitoring Virtual Log File Density in SQL Server

The SQL Server Transaction Log is comprised of Virtual Log Files (VLFs), which vary in size, depending on how you have grown your Transaction Log over time. Each transaction that occurs on your SQL Server instance is logged to the Transaction Log prior to being hardened to your data files. SQL Server will start writing to one VLF and continue to move to the next VLF as it progresses through the Transaction Log. This is how SQL Server maintains the “C” in ACID; that is “Consistency”. There is much more to be said with regard to the Transaction Log, but I will not go into the details in this post.

If a transaction is running for a very long time, or if a log backup has not been taken in a long while, or if a VLF cannot be cleared for any other reason (a SQL mirror might be down), the number of VLFs that are available will start to diminish. If the Transaction Log ever runs out of available VLFs, it will either have to grow the Transaction Log, or your database will have to stop. Due to the nature of SQL Server, the database cannot remain available to users without available space to record transactions; again, due to maintaining ACIDity. Neither eventuality is optimal: you do not want your database to become unavailable, nor would you like your Transaction Log to grow (if you can avoid it). When a Transaction Log grows, it must zero-out the entirety of the added diskspace (even if you have Instant File Initialization configured properly).

To avoid these sorts of scenarios, I monitor the density of my active VLFs, to try to proactively handle any situation that might arise, before it becomes a crisis. Below, I will provide the script that I use to check each Transaction Log in the instance.

This script runs “DBCC LogInfo” against each database in the instance, records the number of VLFs, how many are active, and how many are available, then calculates the density as a percentage, and displays the results:

DECLARE @databaseList TABLE ( [database] VARCHAR(256), [executionOrder] INT IDENTITY(1,1) NOT NULL ) DECLARE @vlfDensity TABLE ( [server] VARCHAR(256), [database] VARCHAR(256), [density] DECIMAL(7,2), [unusedVLF] INT, [usedVLF] INT, [totalVLF] INT ) DECLARE @logInfoResult TABLE ( [FileId] INT NULL, [FileSize] BIGINT NULL, [StartOffset] BIGINT NULL, [FSeqNo] INT NULL, [Status] INT NULL, [Parity] TINYINT NULL, [CreateLSN] NUMERIC(25, 0) NULL ) DECLARE @currentDatabaseID INT, @maxDatabaseID INT, @dbName VARCHAR(256), @density DECIMAL(7,2), @unusedVLF INT, @usedVLF INT, @totalVLF INT INSERT INTO @databaseList ( [database] ) SELECT [name] FROM [sys].[sysdatabases] SELECT @currentDatabaseID = MIN([executionOrder]), @maxDatabaseID = MAX([executionOrder]) FROM @databaseList WHILE @currentDatabaseID <= @maxDatabaseID BEGIN SELECT @dbName = [database] FROM @databaseList WHERE [executionOrder] = @currentDatabaseID DELETE @logInfoResult FROM @logInfoResult INSERT INTO @logInfoResult EXEC('DBCC LOGINFO([' + @dbName + '])') SELECT @unusedVLF = COUNT(*) FROM @logInfoResult WHERE [Status] = 0 SELECT @usedVLF = COUNT(*) FROM @logInfoResult WHERE [Status] = 2 SELECT @totalVLF = COUNT(*) FROM @logInfoResult SELECT @density = CONVERT(DECIMAL(7,2),@usedVLF) / CONVERT(DECIMAL(7,2),@totalVLF) * 100 INSERT INTO @vlfDensity ( [server], [database], [density], [unusedVLF], [usedVLF], [totalVLF] ) VALUES ( @@SERVERNAME, @dbName, @density, @unusedVLF, @usedVLF, @totalVLF ) SET @currentDatabaseID = @currentDatabaseID + 1 END SELECT [server], [database], [density], [unusedVLF], [usedVLF], [totalVLF] FROM @vlfDensity ORDER BY [density] DESC |

I sometimes run this script across all of my instances using Red-Gate MultiScript to see how my VLF densities are doing across my entire enterprise. This is particularly useful when our DR site is down for some reason, it helps me keep track of which databases, if any, are in danger of having an impact due to VLFs being unable to clear.

Simple Walk-Through for Setting Up Oracle Data Guard

In most Enterprise-Class database environments, there is the very real need for maintaining a near-line, transactionally-consistent “mirror” of your production databases. This sort of technology goes beyond the benefits of a cluster, in that it can be part of a Disaster Recovery or Business Continuity plan, in which the entire primary data center is unavailable. Each RDBMS has a different set of tools to help the DBA provide this sort of solution. In Oracle, the tool I use is Data Guard.

Oracle Data Guard has been a feature in Oracle since 8i, but it has evolved over the years. I will be using the syntax and features available in Oracle 11g. As with almost every feature in Oracle, there are multiple ways to configure Data Guard and there are countless combinations of options that can be utilized at every step along the way. I do not intend to discuss exhaustively the whole of Data Guard. I will leave the idiosyncrasies for the online documentation or other blogs to do that. I simply want to present a simple way to get an 11g, +ASM-based, stand-alone, instance covered by a Data Guard Physical Standby on another server.

My initial environment consists of two Windows servers, one at my primary site, which I will name “Oracle”, and one at my secondary site, which I will name “OracleDR”. On each server, I have installed the grid components and a single instance of the database engine. I have configured ASM on each server, and two Disk Groups on each ASM instance, in my grid home on each server. On “Oracle”, the Disk Groups are called “PROD_DATA” and “PROD_RECOVERY”. On “OracleDR”, the Disk Groups are called “DR_DATA” and “DR_RECOVERY”. I also have configured each of my servers with a single Oracle Listener, in the grid home. My production Database Instance is named “TEXAS”, has a db_unique_name of “TEXASP”, is open, is in archivelog mode, and is serving users. “OracleDR” does not have any database instances at this point.

Please read through the entire walk-through before beginning your own Data Guard setup.

Preparing for the New Instance

First, take a fresh backup of the old database instance, using an RMAN script, similiar to this (starting from a command prompt) (11g users, see special note below, regarding skipping the backup):

CD O:\Oracle\11.2.0.2\TEXAS\BIN

SET ORACLE_HOME=O:\Oracle\11.2.0.2\TEXAS

SET ORACLE_SID=TEXAS

RMAN TARGET /

RUN {

ALLOCATE CHANNEL D1 TYPE DISK;

ALLOCATE CHANNEL D2 TYPE DISK;

ALLOCATE CHANNEL D3 TYPE DISK;

BACKUP AS COMPRESSED BACKUPSET FORMAT 'W:\TEXAS\df_t%t_s%s_p%p' DATABASE;

RELEASE CHANNEL D1;

RELEASE CHANNEL D2;

RELEASE CHANNEL D3;

}

EXIT

Next, create a standby controlfile, which will contain our fresh backup (assuming I am using my controlfile for cataloging RMAN):

SQLPLUS / AS SYSDBA ALTER DATABASE CREATE STANDBY CONTROLFILE AS 'W:\TEXAS\StandbyCTL.CTL'; EXIT

We are also going to need a copy of the production pfile, to create our new instance at the DR site:

SQLPLUS / AS SYSDBA CREATE PFILE='W:\TEXAS\initTEXASDR.ora' FROM SPFILE; EXIT

Now, I logon to “OracleDR”, make sure the same file structure (W:\TEXAS\) exists, and copy these files over to the DR server:

robocopy \\Oracle\W$\TEXAS W:\TEXAS

Creating the New Instance and Duplicating Production to DR

Now, we need to move that pfile to the TEXASDR home’s “database” directory and modify it to serve our DR instance. Change the following parameters:

- control_files=’W:\TEXAS\StandbyCTL.CTL’ (temporarily, while we setup the standby)

- db_create_file_dest=’+DR_DATA’

- db_create_onlinge_log_dest_1=’+DR_DATA’

- db_recovery_file_dest=’+DR_RECOVERY’

- log_archive_dest_1=’LOCATION=+DR_RECOVERY’

- db_unique_name=’TEXASDR’

- change diagnostic_dest (if different from the production server)

Next, it is time to create our DR database instance. use ORADIM on “OracleDR” to accomplish this:

CD O:\Oracle\11.2.0.2\TEXASDR\BIN SET ORACLE_HOME=O:\Oracle\11.2.0.2\TEXASDR SET ORACLE_SID=TEXASDR ORADIM -NEW -SID TEXASDR -INTPWD ***** -STARTMODE AUTO

We have to make sure we have connectivity both directions between the two instances. So, setup tnsnames.ora and listener.ora on both sides so they can see each other. You can verify this using TNSPING.

We should also copy the Password File from production to DR. The passwords must match on both instances.

We then startup the new instance in nomount mode and initiate a duplicate for standby:

SQLPLUS / as sysdba STARTUP NOMOUNT; EXIT RMAN TARGET /@TEXASP AUXILIARY /@TEXASDR DUPLICATE TARGET DATABASE FOR STANDBY;

If you are using 11g, take special note:

There is a new feature in Oracle 11g which allows you to skip the backup and control file portions of this and skip straight to creating the instance and doing a duplicate from the live production instance. The RMAN command used here would be:DUPLICATE TARGET DATABASE FOR STANDBY FROM ACTIVE DATABASE;

Cleanup and Standby Configuration

Once the duplicate has completed, we now have a physical standby instance at the DR site. We just need to do some final configuration on this new instance before we can turn on Data Guard.

We need to move that Control File to a more permanent location, and make a few copies of it. So, let’s get back into SQLPLUS and bounce the new Oracle instance and bring it back up in nomount mode:

CD O:\Oracle\11.2.0.2\TEXASDR\BIN SET ORACLE_HOME=O:\Oracle\11.2.0.2\TEXASDR SET ORACLE_SID=TEXASDR SQLPLUS / as sysdba SHUTDOWN IMMEDIATE: STARTUP NOMOUNT; EXIT; RMAN TARGET / RESTORE CONTROLFILE TO '+DR_DATA' FROM 'W:\TEXAS\StandbyCTL.CTL'; / (the / repeats the last command in the buffer, I'm doing it twice, for a total of three copies of the Control File) / EXIT

Now, head over to the grid home and use ASMCMD to check what names the RMAN RESTORE gave the Control Files:

CD O:\Oracle\11.2.0.2\grid\BIN SET ORACLE_HOME=O:\Oracle\11.2.0.2\grid SET ORACLE_SID=+ASM ASMCMD CD +DR_DATA/TEXASDR/CONTROLFILE LS EXIT

We take the three Control Files listed from the ASMCMD “LS” command and change the pfile control_files parameter to list all three of these locations (with the full ASM path).

Now we need to apply these changes, so get back into SQLPLUS, shutdown the instance, create an spfile, and startup the instance, this time in mount mode:

CD O:\Oracle\11.2.0.2\TEXASDR\BIN SET ORACLE_HOME=O:\Oracle\11.2.0.2\TEXASDR SET ORACLE_SID=TEXASDR SQLPLUS / as sysdba SHUTDOWN IMMEDIATE: CREATE SPFILE FROM PFILE; STARTUP MOUNT; EXIT

At this point, we technically have an instance ready for Data Guard, but there is one last issue we need to resolve so that our instance is ready for a switchover, should we ever need it in the future (which is the primary point of having the DR site in the first place). The outstanding issue is our standby logfiles. Each instance needs to have a set of standby logfiles that can be used to apply the incoming logs from the primary instance. Please note: when you switchover to your DR site, at some point, you will most likely want to apply the logs from the DR site back to the Primary site, before initiating a switch back to the Primary (to keep the data in sync), so, you will need to create these standby logfiles on the Primary instance as well. You must always have at least the same number of standby log files as the other side has online logs, plus one, and the standby log files must be at least as large as the online log files. For instance, if your Primary site has three online log files, you will need four standby log files on the DR instance (as well as four standby log files on the Primary instance, to cover the DR online log files).

So, let’s execute this sort of code on both sides, to create the necessary standby logs:

SQLPLUS / as sysdba ALTER SYSTEM SET STANDBY_FILE_MANAGEMENT='MANUAL'; (you will most likely have to execute this command to create the new files, I recommend changing it to 'AUTO' when you are done) SELECT * FROM V$LOG; (to verify how many online logs we have - let's assume 3, each 52428800 bytes) ALTER DATABASE ADD STANDBY LOGFILE SIZE 52428800; / / / (again, we are creating four standby log files to cover the online log files, plus one) SELECT * FROM V$STANDBY_LOG; (to verify they were created as you expected) EXIT

Now, we are ready to turn on managed standby (this is activating Data Guard):

SQLPLUS / as sysdba ALTER DATABASE RECOVER MANAGED STANDBY DATABASE USING CURRENT LOGFILE DISCONNECT FROM SESSION; EXIT

Sync-Up the DR Instance with Production

Depending on several factors (how long the DUPLICATE took, how often you take archive log backups, so forth), there is a good chance that your Production system has moved on and your Standby is several sequences behind. To proceed with the last step, it is not necessary that all of your archive logs be applied to your standby, but the standby must be at least to the point of your last archive log backup. This is because the Primary can send over any sequences that are missing at the Standby, but only up to the point that it has readily on hand.

So, we are going to have to move your most recent archive log backups to the DR site, catalog them into the Standby instance, and restore them to the Standby so that Data Guard can apply them. We’ll go through this step-by-step, but first, let’s see what sequence your Standby instance is waiting on:

SQLPLUS / as sysdba SELECT process, status, thread#, sequence# FROM V$MANAGED_STANDBY; EXIT

This is a good glance at what your Data Guard processes are doing. If one of the ARCH processes has a STATUS of “WAITING”, look at the SEQUENCE#. Let’s say the SEQUENCE# is 12420. That means that the DUPLICATE we did earlier, only took us up through SEQUENCE# 12419, and the Primary instance has moved on since then. So, we need to restore from SEQUENCE# 12420 all the way up to whatever your last archive log backup holds. So, we make a note of SEQUENCE# 12420 and move on.

Using whatever method you like best, robocopy for instance, copy all of your archive log backups that have been taken since the DUPLICATE began to a location on your DR Site server, “OracleDR” (W:\Backups\TEXASDR, for instance). Next, we are going to catalog those into our Standby instance, using RMAN. We will then list all of the archive log backups that have been cataloged, to find which sequences they contain:

RMAN TARGET / CATALOG START WITH 'W:\Backups\TEXASDR\' NOPROMPT; LIST BACKUP OF ARCHIVELOG ALL; EXIT

Once this completes, you will have seen all of your archive log backups scrolled on the screen, near the end you should find the SEQUENCE# that is the greatest. The output will look something like this:

BS Key Size Device Type Elapsed Time Completion Time

------- ---------- ----------- ------------ ---------------

12095 45.50K DISK 00:00:00 31-JAN-12

BP Key: 12125 Status: AVAILABLE Compressed: YES Tag: TAG20120131T000

414

Piece Name: W:\BACKUPS\TEXASDR\AL_T12345466_S85246_P1

List of Archived Logs in backup set 12095

Thrd Seq Low SCN Low Time Next SCN Next Time

---- ------- ---------- --------- ---------- ---------

1 12516 1346096477 31-JAN-12 1121631224 31-JAN-12

Notice the Seq field, this is the SEQUENCE# we are looking for, 12516. So, referencing the SEQUENCE# we noted above (the one that ARCH is currently waiting on), we see that we need to restore all sequences from 12420 to 12516. So, we perform this RMAN command:

RMAN TARGET / RESTORE ARCHIVELOG SEQUENCE BETWEEN 12420 AND 12516; EXIT

Restoring and applying all of those logs may take a considerable amount of time, but we can check on the status by running this command:

SQLPLUS / as sysdba SELECT THREAD#, SEQUENCE#, FIRST_TIME, APPLIED, COMPLETION_TIME FROM V$ARCHIVED_LOG WHERE APPLIED = 'NO' ORDER BY SEQUENCE#; EXIT

This will show you how many sequences still need to be applied to the Standby instance. When the query returns no rows, we have applied all available (restored) sequences. At this point, depending on how long the application of all of those logs took, it may be necessary to copy any new backups and run through this whole process again. One way to avoid this would be to temporarily suspend archive log backups on your Primary, but that may or may not be acceptable in your environment.

Setup the Data Guard Broker

The concept of what is going on up to this point should be fairly clear. We are taking redo logs from our Primary and replaying them on our Standby. In this way, we are manually maintaining the Standby and keeping our two sites in sync (only up to the last archive log backup). This is certainly not how you want to run your Standby, you want an automated way to do this all the time, without human intervention, and, if possible, keeping up to the last completed transaction, not just the last backup.

This is where Data Guard Broker comes in. The broker is a simple process in Oracle that manages this entire process for you. It even makes switching over to the DR site and switching back easier. So, first, we need to turn the process on at both sites:

SQLPLUS / as sysdba ALTER SYSTEM SET DG_BROKER_START=TRUE SCOPE=BOTH; EXIT

Verify that the DMON process is running:

SQLPLUS / as sysdba SELECT PROGRAM FROM V$SESSION WHERE USERNAME IS NULL; EXIT

Now, the configuration (as well as all commands, when possible) should be ran from the Primary instance. So, connect to the Primary instance and issue the following to get into the Data Guard Broker Manager and verify that no configuration already exists:

CD O:\Oracle\11.2.0.2\TEXAS\BIN SET ORACLE_HOME=O:\Oracle\11.2.0.2\TEXAS SET ORACLE_SID=TEXAS DGMGRL CONNECT / SHOW CONFIGURATION

The Broker makes you name your configuration, even though, at this time, you can only have one configuration. Let’s create a configuration, referencing the database by its unique name and the connect identifier by whatever you setup in TNSNames (in my case TEXAS):

CREATE CONFIGURATION 'DATAGRD' AS PRIMARY DATABASE IS 'TEXASP' CONNECT IDENTIFIER IS TEXAS;

We now add our Standby to the configuration:

ADD DATABASE 'TEXASDR' AS CONNECT IDENTIFIER IS TEXASDR MAINTAINED AS PHYSICAL;

We can check the broker configuration of our databases by issuing:

SHOW DATABASE VERBOSE 'TEXASP'; SHOW DATABASE VERBOSE 'TEXASDR';

Now, we can enable our configuration to allow the Broker to start managing our Data Guard:

ENABLE CONFIGURATION;

Lastly, we need to configure how/when our logs are transferred and applied to the Standby. We have two settings we need to configure, LogXPTMODE and Protection mode.

LogXPTMODE has three options, ARCH, ASYNC, or SYNC.

- ARCH is similiar to SQL Log Shipping, it only sends a completed archive log, so, depending on how fast your instance runs through online logs, will be a large determining factor in how often your DR site has that data. Your DR site will almost always be behind by some margin.

- ASYNC is only used for Logical Standby instances

- SYNC is going to send each transaction to your Standby as soon as possible, after it has written it to the Primary online log. Your DR site will almost always be caught up to the last committed transaction.

In my opinion, if you have sufficient bandwidth, you should always choose SYNC.

Protection mode also has three options, MAXPROTECTION, MAXAVAILABILITY, or MAXPERFORMANCE.

- MAXPROTECTION will not close a transaction (commit) on the Primary side until the transaction has ALSO been written to the DR site. This will ensure that no transactions that are committed are lost on the Standby, even when an emergency failover occurs. This would most often be used in a financial application, where not a single transaction can be lost. The downside to this Protection mode is that any network blip or short-term disconnect will halt transactions from committing on the Primary instance, until the network connectivity is restored.

- MAXAVAILABILITY will return to the user as being committed as soon as the Primary instance has written the transaction to the online log and will then make a best-effort to get that transaction to the Standby as quickly as possible. This allows the Primary instance to be more resilient to short-term outages of connectivity to the Standby. The downside, however, is that if an emergency failover occurs before that transaction is sent to the Standby, a user could think that a transaction is hardened to the database, but see that it is actually missing at the DR site.

- MAXPERFORMANCE waits for the archive writer process to send the last archive log over to the Standby, this is very much like SQL Log Shipping. The upside is that no matter the state of the Standby instance, the Primary database continues along happily. The downside is that if a failover occurs, only the transactions contained in the last archive log that has been sent to the Standby will be preserved.

In my opinion, either MAXPROTECTION or MAXAVAILABILITY make sense for this setting. Due to the nature of my databases, I choose MAXAVAILABILITY.

So, we now configure these settings:

EDIT DATABASE 'TEXASP' SET PROPERTY LogXPTMODE='SYNC'; EDIT DATABASE 'TEXASDR' SET PROPERTY LogXPTMODE='SYNC'; EDIT CONFIGURATION SET PROTECTION MODE AS MAXAVAILABILITY;

And finally, we check our configuration to make sure there are no errors or warnings:

SHOW CONFIGURATION

The configuration should show “SUCCESS”, which means that logs are applying and the Data Guard is properly configured for switchover. If you encounter any errors, they will most likely be due to the Standby not having the most recent archive logs applied yet. Try syncing the Data Guard manually one last time. After you have it synced up, the broker should take over automatically.

Some Code You Should Know

In addition to the syntax stated above to let you check on how the Data Guard is working, there are two more important commands that you will use in DGMGRL. They are “SWITCHOVER” and “FAILOVER”. It would be worth while to read up on the entire DGMGRL process, but these two are critical, in that they handle switching your Production instance from running over at your Primary site to your DR site.

SWITCHOVER – this is a graceful switch from your Primary instance to your DR. It makes sure that you are all synced up from your Primary to your DR, kills open connections on your Primary instance, and switches the FAL roles so that your DR site is now the live Production and your Primary site is now the Standby. It will also switch you back, when the time comes.

FAILOVER – this is a much more drastic command. You would only use this when you are in a dire situation, such as, your Primary Site is gone, unavailable, or completely down in some way. This is going to ignore trying to reconcile any last transactions and open your DR site instance with a RESETLOGS. You will not be able to simply switch back over to primary. Your best course of action after your Primary site is available again, will most likely be to blow away your old Primary instance and setup a new Data Guard from your DR site back to your Primary site and THEN perform a switchover to get the instance back to the primary. But, it will be a new incarnation of your instance.

I hope this has helped to get you running with Data Guard. I do not guarantee that my scripts are perfect, but I have tried to triple-check them as much as possible.

Perform DBCC CheckDB Weekly using Red-Gate SQL Virtual Restore

There are many articles on the Internet explaining why it is important to check the consistency of all of your databases on a regular basis. If you are not made aware of corruption in your system as soon as possible, it is likely that you could miss a growing problem until you are in a crisis. However, even though we know consistency checking is important, it can be hard to find a time when you can afford the large performance hit upon your production machines, due to the resource intensity of CheckDB.

So, rather than run CheckDB on my production boxes, I made the choice to take the latest full backup and restore it to a test server, and run CheckDB against that every week. While this does not avoid the possibility of corruption happening since the last full backup, it does let me know that, at the very least, my last full backup is safe. I also get the added built-in benefit of testing my restore strategy each week.

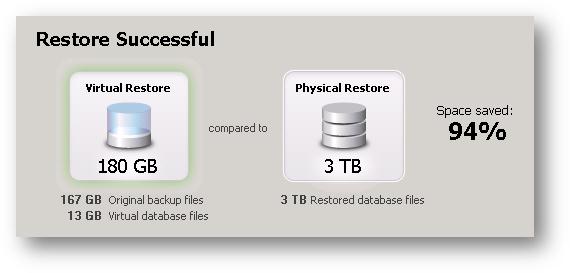

However, this poses other problems. Some of my databases are quite large, and doing a full restore takes a considerable amount of time and disk space. This is where Red-Gate comes in.

Red-Gate recently added a tool to their arsenal called SQL Virtual Restore. This tool allows the DBA to use Red-Gate’s HyperBac Filter Driver to allow you to restore a virtual copy of a database using far less space, spending less time, and all utilizing native SQL Backup and Restore syntax. SQL Virtual Restore references the backups you supply to it, creates custom “indexes” on your local disk (to help Red-Gate transcend your backups quickly), and creates small empty structures for your files (virtual database files). This allows you to have an unaltered database backup serve your reads and the virtual database files serve your writes. The savings are truly amazing:

With this tool at hand, I next needed to get my scripts written.

With this tool at hand, I next needed to get my scripts written.

I already have a harness for this sort of maintenance – I have an instance dedicated to DBA tasks. Running in this instance, are different jobs that perform tasks at different intervals; every 15 minutes, every hour, day, week, so forth. These jobs fire off SSIS packages which collect information about my instances in my environment, such as which backups ran successfully in the last week, what security changes have occurred, which databases have datafiles which are going to grow soon, which indexes need attention, which jobs are running longer than usual, and so forth; all based off of a list of instances that I update whenever I add or modify a SQL instance to my environment.

So, since I already have all of the information I will need to write my restore and CheckDB scripts, I am all set. I created a stored procedure that would:

- Loop through my list of Instances

- For each instance, loop through each database

- For each database:

- Gather the list of the backup files from the latest successful full backup

- Verify that the most recent backup is not “too old” (comparing against a configurable value)

- Gather all datafile logical names (as of the time the backup was taken)

- Copy those backup files locally

- Create a native syntax restore script, using dynamic SQL, from the list of backup files and specifying a local location for each virtual data file (one VDF for each logical file in the backup)

- Attempt to execute the dynamic SQL restore script, noting any errors that might occur

- If the restore was successful, execute DBCC CheckDB against the newly restored database, saving all results to a table

- Once the DBCC CheckDB has been performed, I check the results in the table to discover if there were any problems found, noting the result

- I then drop the Virtual Database and the local copy of the backups

- Once every database in the instance has been checked, the script then e-mails me with the results, each database is listed, along with whether the check was successful, found issues that I need to look into, if the latest backup was too old, or if the restore failed (along with any errors)

Using this method, I am able to consistency check all of my databases every week without ever having to lift a finger. I look over the e-mails I get from the script, for each Instance, and make sure everything was successful. If an error ever pops up, I know that I only have to spend my time on the databases that are actually having problems.

This has been a huge time-saver for me and has given me additional peace of mind.

Moving a Filegroup to a New Disk Array with No Downtime

We just bought a new SAN and I am in the midst of migrating our SQL Database Instances to it. One of the challenges I am facing is that I have several very large tables (billions of rows) and some of them contain LOB data. So, simply dropping the non-clustered indexes and then recreating the clustered index online is not an option, and, of course, all of the systems have to remain online during the migration. I certainly cannot get a downtime long enough (hours) to move some of my largest tables. So, the challenge I’ve been facing, is how to move these tables with no (or at least minimal) downtime, and preferably with very little impact to production performance.

Well, I have one thing really going for me – I already have my filegroups setup exactly the way I want them. My partitions are stretched across all the right filegroups, my tables are separated off into certain groups according to various logic on each database, and I’m not really looking to change anything like that; at least not with this migration. So, ultimately, I just need to figure out how to move each filegroup to the new array, without interrupting anything.

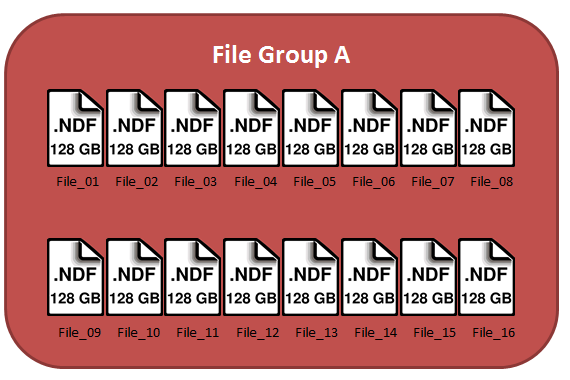

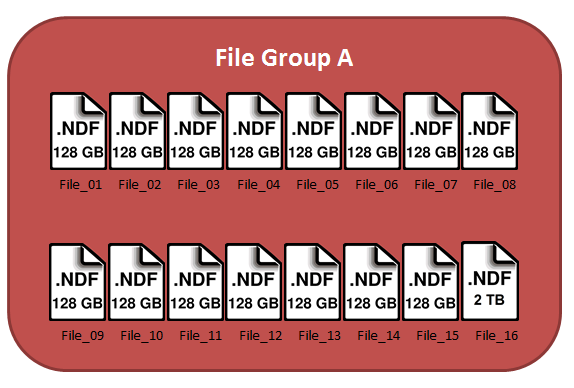

So, let’s look at one of my filegroups: it consists of 16 files, each of size 128GB, for a total size of 2TB. Like so:

Now, my original idea was to create 16 new files in the same filegroup but on the new array and then empty and remove the old files one by one, until this filegroup was completely on the new SAN. While, this would technically work, it causes a huge problem that I learned about from one of Paul Randal’s (Blog | Twitter) lessons during the SQLskills Immersion Event 1: Internals and Performance. Paul also discusses the problem in a SQL Server Pro article from August 2011. Basically, the problem is that due to the “proportional fill” method of writing to datafiles in a filegroup, you will cause your files to be filled unevenly, resulting in a hot-spot at whichever file(s) have the most freespace at the end of your process.

Now, my original idea was to create 16 new files in the same filegroup but on the new array and then empty and remove the old files one by one, until this filegroup was completely on the new SAN. While, this would technically work, it causes a huge problem that I learned about from one of Paul Randal’s (Blog | Twitter) lessons during the SQLskills Immersion Event 1: Internals and Performance. Paul also discusses the problem in a SQL Server Pro article from August 2011. Basically, the problem is that due to the “proportional fill” method of writing to datafiles in a filegroup, you will cause your files to be filled unevenly, resulting in a hot-spot at whichever file(s) have the most freespace at the end of your process.

So, to avoid this in my environment, I decided to first, grow one of the files in my filegroup to a large enough size to hold all of the data in that filegroup. So, assuming that each of my files in this filegroup are almost full, I will grow File_16 to 2 TB.

Now, my plan is to empty and remove each file in the filegroup, except File_16. This will cause all of the data to be moved down to this one file. The actual emptying of each file has the potential to be very intensive, however, I have found minimal effects on my servers when doing this. This process can take a very long time, but since I have to move these datafiles online, this is the best way I have found in this sort of scenario. Test this first in a non-production environment. I would write and issue a script similar to this:

Now, my plan is to empty and remove each file in the filegroup, except File_16. This will cause all of the data to be moved down to this one file. The actual emptying of each file has the potential to be very intensive, however, I have found minimal effects on my servers when doing this. This process can take a very long time, but since I have to move these datafiles online, this is the best way I have found in this sort of scenario. Test this first in a non-production environment. I would write and issue a script similar to this:

DBCC SHRINKFILE(File_01, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_01] DBCC SHRINKFILE(File_02, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_02] DBCC SHRINKFILE(File_03, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_03] DBCC SHRINKFILE(File_04, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_04] DBCC SHRINKFILE(File_05, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_05] DBCC SHRINKFILE(File_06, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_06] DBCC SHRINKFILE(File_07, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_07] DBCC SHRINKFILE(File_08, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_08] DBCC SHRINKFILE(File_09, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_09] DBCC SHRINKFILE(File_10, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_10] DBCC SHRINKFILE(File_11, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_11] DBCC SHRINKFILE(File_12, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_12] DBCC SHRINKFILE(File_13, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_13] DBCC SHRINKFILE(File_14, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_14] DBCC SHRINKFILE(File_15, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_15] |

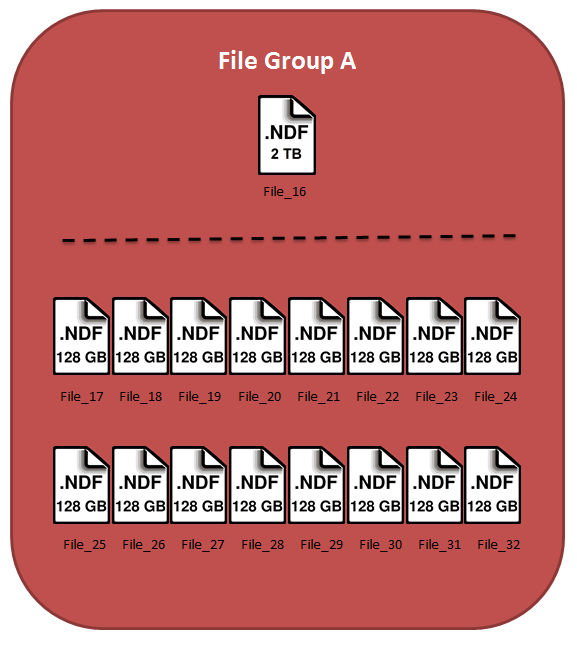

At this point, I add the 16 new files onto the new SAN, and we end up with one large file on the old SAN and 16 new files on the new SAN:

Now, all there is left to do is issue one more empty command to stripe that 2TB of data amongst the 16 new files.

Now, all there is left to do is issue one more empty command to stripe that 2TB of data amongst the 16 new files.

DBCC SHRINKFILE(File_16, EMPTYFILE) ALTER DATABASE [DatabaseName] REMOVE FILE [File_16] |

After this command has finished, the data will be nearly perfectly striped across all 16 files, on the new SAN. And this was accomplished with no downtime and, from my experience, very little impact to production performance. Your particular environment may see more performance impact, so please test in a non-production environment before trying this with a live system.

Understanding SQL Server Licensing for the Passive Instance

As anyone who has ever worked with any Microsoft Enterprise-Class software can tell you, Microsoft Licensing can be complicated. Add in Software Assurance, Enterprise Agreements, Multiplexing, Virtualization, and HA Technologies and it gets even worse. Sometimes you can even get experts from Microsoft that cannot clear it up for you. I have found that the best way to address Microsoft Licensing is on a case-by-case basis. However, there are a few cases that are both broad enough and common enough, as to warrant some decent documentation. I believe my recent experience qualifies as one of those cases.

We have been planning to add a new node to our primary SQL cluster. This particular cluster has grown over the last few years at a fairly steady clip. It started out as an Active-Passive Cluster, covered by a single Enterprise Per-Proc license. As time went on, I realized that we needed to start splitting some of that load onto two nodes, so that would mean bringing that Passive node into active duty, which, of course, would require the purchase of an additional Enterprise Per-Proc license. No big deal, we had budgeted for it and we were ready to go in no time. Shortly after that, I added a single node at our DR site, which was the SQL Mirroring Failover Partner for the cluster. This provided the hardware redundancy we wanted at the primary site (because a single node could handle the load of the entire cluster, if needed) and the logical redundancy we needed at the DR site. All was well, and everything was legal.

Well, now it is time for a third node. We still have enough horsepower and RAM to support all of the instances on the two nodes for quite a while, but the database requirements have grown enough that I wouldn’t feel comfortable if the entire workload had to perform on a single node, should the other node fail. So, I need to add a passive node. Additionally, this would require turning that one DR node into a two-node cluster at the DR site. This is where I had a lesson to learn.

Due to several documents I had read, from Microsoft (including the 2008 SQL Licensing Overview document), I had come to the conclusion that the passive node in an Active/Active/Passive cluster did not require its own license (which is true) and that a passive mirror did not require its own license (which is also true). However, I had misunderstood the actual meaning of the PUR‘s statement, “”. It turns out that each licensed node can only ever cover a single passive node (either a cluster node or a mirror, not both). This is quite clearly delineated in the Microsoft Software License Terms for Microsoft SQL Server 2008 R2 Enterprise, Section 4, paragraph e, which states:

Fail-over Server. For any operating system environment in which you run instances of the server software, you may run up to the same number of passive fail-over instances in a separate operating system environment for temporary support. If you have licensed the server software under the Per Processor licensing model, the number of processors used in that separate operating system environment must not exceed the number of processors used in the corresponding operating system environment in which the active instances are running. You may run the passive fail-over instances on a server other than the licensed server.

Meaning, If you have a total of only two Enterprise Per-Proc licenses, you cannot have more than two total servers acting as some form of failover against those licenses.

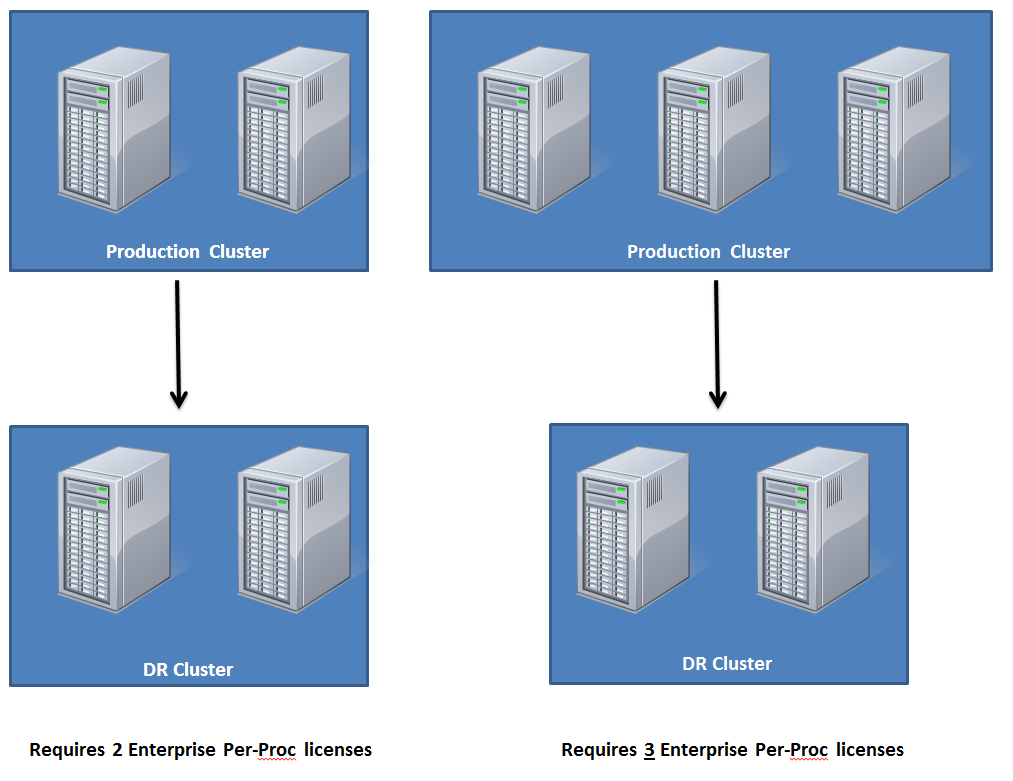

So, to help visualize this:

My solution was to go ahead and bite the bullet on buying the third license and using my production cluster in an Active/Active/Active setup, in which any two of the nodes could support the load of all three. In some shops, however, this may be a reason to hold off buying another node for your cluster, depending, of course, on your specific needs.

Monitoring Active Directory Groups for Windows Authentication in SQL Server Using PowerShell

According to Microsoft’s Best Practices, specifically, their SQL Server 2005 Security Best Practices Document, Windows Authentication is the preferred method of authentication in the SQL Server world. They state that SQL Authentication should only be used, when possible, for legacy, third-party, or non-Windows applications whenever possible. Personally, this is good news, our environment is well-managed with groups (as opposed to assigning rights directly to users) and our system administrators are completely on board. So, as older legacy applications have been rolling off to archive, I have made a concerted effort to push for only using Windows Authentication wherever possible. This has been a great success and almost all of my SQL logins are gone, but taking the authentication out of the sole hands of the DBAs can cause other problems.

On more than one occasion, a user has requested access to some system of a low-level helpdesk person. The helpdesk person, meaning well, but not aware of the implications, has placed said user in one of my “DB Only” groups in my “Database Groups” OU in Active Directory, thus granting them some level of access to a database. While this is not inherently bad, it certainly can lead to a user having access to a database which they should not, for compliance reasons, or otherwise. Usually, your system administrators have greater access to Active Directory than you, because it is, after all, their system, you’re just using it. And even if you have trained your systems guys well, someone can malevolently alter access without your knowledge. So what can be done about this?

In my environment, I took several actions to help give me better control and visibility into my Windows Authentication groups. First, long ago, I had my systems administrators create an OU that was all mine. My DBA group had full delegated rights to it and we could create OUs under it to help us keep things organized, create groups, and assign users to said groups. This has helped in my migration away from SQL logins and toward Windows Authentication, without having to bother them every time I need a new group for some set of permissions. It also created a logical divide that I could point to for those helpdesk guys who might accidentally grant rights to users.

Recently, however, I wanted to make sure I had a good audit of to whom which rights were being assigned. I have also been trying to dive into PowerShell, so I thought this would be a good opportunity to stretch a little and figure out how to get this done. I am not going to delve too much into PowerShell syntax or anything, there are tons of blogs out there that are way, way better than me at PowerShell, I am just learning it now. If you’re just getting started in PowerShell, may I recommend Sean McCown’s videos on the subject. They are a great place to start getting your feet wet. Here is a link to the first in the series.

I knew I would need four basic components to this mini-project:

- A Powershell script to query Active Directory for all groups and group members in my DB OU

- A Task or SSIS Package to pull the output of the script into a holding table

- A sproc to compare the newly captured data with the last known state of data to find the differences and then overwrite the existing data with the newly captured

- Generate an e-mail to notify the DBA team of any differences found in the previous step

I didn’t have any concern with steps 2, 3, or 4, I had done that sort of thing hundreds of times, the Powershell script was the interesting part, so I will focus on that piece for now. I may address the rest in another post later, if anyone is interested.

Now, I am sure there are dozens of scripts that do exactly what I need spread all over the Internet, but I wanted to learn how to do this, so the end product may be a bit unpolished, but here we go.

From my limited knowledge of PowerShell and my software developer background, I knew I would have to load a module to hit Active Directory. So, I started Googling for what that particular module would be called, easily enough it came up right away as “ActiveDirectory”, simple enough. So, I opened PowerShell:

Hmmm, no dice. I thought it must be a Role missing on my machine, so I went to Server Manager and looked through the Roles. The closest thing was “Active Directory Domain Services” which would certainly do it, I’m sure, but I think that would also do a dcpromo, which I definitely do NOT want to do. I don’t want my systems guys getting pissed at me for having made my test server a DC on the domain. So, I called my systems guy, who knows a little PowerShell, and asked him if he knew of a way to only install a portion of the Role. He informed me that what I probably needed was “Remote Server Administration Tools” Feature. Well, that made sense, so I went ahead and installed that Feature (Warning: requires reboot). After my server came back up, I tried the Import-Module again:

Looks like it’s working, so I verified it:

Yep, it’s loaded.

Next, I wanted to browse to my DN (Distinguished Name, which is the long string that defines an object in an LDAP structure, like Active Directory, they usually look similar to CN=SomeGroup,OU=SomeOu,OU=SomeParentOU,DC=domainPortion,DC=domainPortion).

So, I switched my directory to AD and then to my specific DN:

Now we are at my OU within my domain’s Active Directory. So, I did some querying to try to find out what I could do here. Typing “gci” or “dir” get’s you a list of all child elements, and my guess would be that the child elements would be OUs and groups that I have created under my DB OU:

And that’s precisely what I got. OK, I thought, what if I do this recursively?

And hundreds of groups and OUs popped up on my screen. Great, this is exactly what I need for the first portion of my script. But, I don’t want the OUs, at least not for my immediate purposes. Ultimately, I don’t care if someone comes in and creates an OU in my DB OU, it’s not good organization, but it’s not going to directly affect security on my SQL Server. So, I want to ignore anything but groups:

Much better! So, I’m going to store that to a variable for later use. I’ll use the variable to export out my list of groups to a CSV as well as use it as the base for the next part: getting a list of all of the users/groups in each of my groups.

So, what method can I use to find out what users or groups are in a group? I have the DN of the group, can I switch to it like a directory and then just get the children of that location?

Well, yes, I can switch to that specific group, but no, I cannot simply get it’s children. There must be another way. So, when in doubt, ask the system itself:

I issued the command, “Get-Command -Module ActiveDirectory”, which tells the system to tell you which commands are available in the module. The fourth in the list talks about GroupMembers, so I wonder if there is a Get-ADGroupMember, scrolling down:

Yep, let’s give it a shot against one of the groups:

Great! It works! I get a whole list of each user or group in that group. This is exactly what I need for the second portion of my script, the relationship between groups and their members. Now I just need to collect all that data for each group in my variable and export the new collection to another CSV.

Well, there were hundreds of groups, so there are likely to be thousands of members collectively in those groups. So, I don’t want to append a CSV file with each write, so I am going to need to create some structure in memory to hold all of this data as I parse through it and then dump it all out at once to my file. So, to store this 2D data, I would like to have an array of some custom STRUCT.

For those who are unfamiliar with programming, you may have never created your own struct or class before, but it truly is very simple. Basically, to put it in database terms, I am creating a simple data structure, that could be loosely compared to a single row of data. I will then have an array of these structs, making the whole data structure not too dissimilar from a table, where each instance of the struct is another row, and each row contains several fields.

To create my struct I am going to use the add-type command which takes a string that contains the definition of my struct and it’s constructor. Don’t get too hung up on this part, I can go into deeper detail in another post, but for now, just know that this data type will have four fields and a single method that allows it to be loaded with data. The code to create the type looks like this:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | add-type @" public struct DBgroupMember { public string distinguishedName; public string name; public string samAccountName; public string group; public DBgroupMember(string DistinguishedName, string Name, string SamAccountName, string Group) { distinguishedName = DistinguishedName; name = Name; samAccountName = SamAccountName; group = Group; } } "@ |

Now we have our data type (named DBgroupMember) ready to be used to store each group member’s DN, name, username, and the group to which they belong. We just need an array into which we are going to put all of these individual instances.

1 | $DBgroupMembers = @() |

Easy enough. Now, remember that variable in which we stored the list of groups, now we need to iterate through each group in that list and get that group’s members, store them in a new DBgroupMember object, and place that object in our array, the code for that looks like (including the declaration and assignment of the variable that holds the list of groups):

1 2 3 4 5 6 7 8 9 10 | $DBgroupList = gci -Recurse | ? {($_.ObjectClass -like "group")} foreach ($DBgroup in $DBgroupList) { $thisDBGroupMembers = Get-ADGroupMember $DBgroup foreach ($thisDBGroupMember in $thisDBGroupMembers) { $DBgroupMember = new-object DBgroupMember ($thisDBGroupMember.distinguishedName, $thisDBGroupMember.name, $thisDBGroupMember.SamAccountName, $DBgroup) $DBgroupMembers += $DBgroupMember } } |

And now we have two data structures in memory, one ($DBgroupList) which holds a list of all of the groups we care about, and the other ($DBgroupMembers) which holds a list of all of the members of all of the groups. Now we just need to write those out to their files:

1 2 | $DBgroupList | Select-Object Name, DistinguishedName | Export-Csv O:\Scripts\PowerShell\ActiveDirectory_AllDatabaseGroups.csv -notype $DBgroupMembers | Select-Object distinguishedName, name, samAccountName, group | Export-Csv O:\Scripts\PowerShell\ActiveDirectory_AllGroupMembers.csv -notype |

In each of these commands, we are taking our variable, selecting out the fields we care about, and using the Export-Csv command to some local disk location, specifying “notype”, which removes some file markup that would only make our job of importing the CSV into SQL harder later. You can read more about the Export-Csv command here.

Let’s put it all into one script, just to see it all in one glance:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 | Import-Module ActiveDirectory cd AD: cd "OU=YOUR_OU,OU=ANOTHER_OU_MAYBE,DC=PART_OF_YOUR_DOMAIN_NAME,DC=ANOTHER_PART" add-type @" public struct DBgroupMember { public string distinguishedName; public string name; public string samAccountName; public string group; public DBgroupMember(string DistinguishedName, string Name, string SamAccountName, string Group) { distinguishedName = DistinguishedName; name = Name; samAccountName = SamAccountName; group = Group; } } "@ $DBgroupMembers = @() $DBgroupList = gci -Recurse | ? {($_.ObjectClass -like "group")} foreach ($DBgroup in $DBgroupList) { $thisDBGroupMembers = Get-ADGroupMember $DBgroup foreach ($thisDBGroupMember in $thisDBGroupMembers) { $DBgroupMember = new-object DBgroupMember ($thisDBGroupMember.distinguishedName, $thisDBGroupMember.name, $thisDBGroupMember.SamAccountName, $DBgroup) $DBgroupMembers += $DBgroupMember } } $DBgroupList | Select-Object Name, DistinguishedName | Export-Csv O:\Scripts\PowerShell\ActiveDirectory_AllDatabaseGroups.csv -notype $DBgroupMembers | Select-Object distinguishedName, name, samAccountName, group | Export-Csv O:\Scripts\PowerShell\ActiveDirectory_AllGroupMembers.csv -notype |

I think that does it for this particular post. If there is interest, I will go ahead and do another post about the rest of the process, but I hope this has given you some insight into how to browse Active Directory from within PowerShell.

Security Concerns Due to Ownership Chains

I learned something at the NTSSUG meeting tonight, via our guest speaker, Bryan Smith (MSDN Profile), and I wanted to share it with any developers who may read this.

I was under the impression that granting a user rights, such as “execute”, to a specific Stored Procedure does not grant any rights to the objects referenced by that Stored Procedure, and this is completely true. However, I understood that to mean that a user who has “execute” on a Stored Procedure, but does not have “update” rights to one of the tables that Stored Procedure writes to, would therefore be unable to execute that Stored Procedure successfully, due to missing those underlying rights. This second conclusion, however is actually a big “it depends” and for some small database shops, it is flat-out wrong. The deciding factor has to do with Ownership Chains.

If the Ownership Chain is unbroken between the originating Stored Procedure and the objects that it references, that is to say, all of these objects have the same owner as the Stored Procedure, then the Query Execution engine does not do further rights-checks against each of the called objects. Since, in many environments, most objects are owned by “dbo”, granting “execute” on a Stored Procedure to a specific login means, quite literally, that the user in question can successfully execute that Stored Procedure, regardless of their rights on those objects.

This, obviously, means that you have to be much more judicious in assigning the “execute” permission to users and also that you should consider purposefully breaking Ownership Chains in some scenarios. However, don’t drop everything and start making changes; you wouldn’t want to break all of the possible existing code that was previously taking advantage of this. But, as you go forward, and especially as new Stored Procedures are created, be very stringent on the assigning of rights. Above all, be aware of how this affects any T-SQL code that you write.