There are many articles on the Internet explaining why it is important to check the consistency of all of your databases on a regular basis. If you are not made aware of corruption in your system as soon as possible, a growing problem can stay hidden until you are in a crisis. However, even though we know consistency checking is important, it can be hard to find a time when your production machines can afford the large performance hit, because CheckDB is so resource-intensive.

So, rather than run CheckDB on my production boxes, I chose to restore the latest full backup to a test server and run CheckDB against that copy every week. While this cannot catch corruption that has occurred since the last full backup, it does tell me that, at the very least, my last full backup is safe. I also get the built-in benefit of testing my restore strategy each week.

However, this poses other problems. Some of my databases are quite large, and doing a full restore takes a considerable amount of time and disk space. This is where Red-Gate comes in.

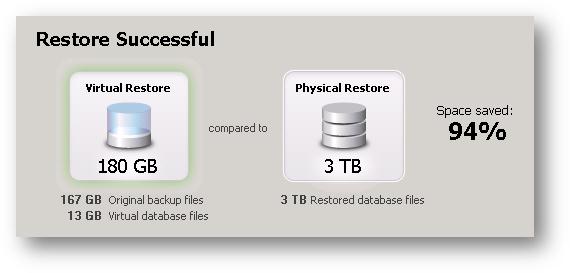

Red-Gate recently added a tool to their arsenal called SQL Virtual Restore. This tool uses Red-Gate’s HyperBac Filter Driver to let the DBA restore a virtual copy of a database in far less space and time, all with native SQL Backup and Restore syntax. SQL Virtual Restore references the backups you supply to it, creates custom “indexes” on your local disk (to help Red-Gate traverse your backups quickly), and creates small, empty structures for your files (virtual database files). The unaltered database backup then serves your reads while the virtual database files serve your writes. The savings are truly amazing.

With this tool at hand, I next needed to get my scripts written.

I already have a harness for this sort of maintenance: an instance dedicated to DBA tasks. Running in this instance are jobs that perform tasks at different intervals: every 15 minutes, every hour, every day, every week, and so forth. These jobs fire off SSIS packages that collect information about the instances in my environment, such as which backups ran successfully in the last week, what security changes have occurred, which databases have datafiles that are going to grow soon, which indexes need attention, and which jobs are running longer than usual. All of this is driven by a list of instances that I update whenever I add or modify a SQL instance in my environment.

So, since I already have all of the information I will need to write my restore and CheckDB scripts, I am all set. I created a stored procedure that would:

- Loop through my list of instances
- For each instance, loop through each database
- For each database:
    - Gather the list of backup files from the latest successful full backup
    - Verify that the most recent backup is not “too old” (comparing against a configurable value)
    - Gather all datafile logical names (as of the time the backup was taken)
    - Copy those backup files locally
    - Create a native syntax restore script, using dynamic SQL, from the list of backup files, specifying a local location for each virtual data file (one VDF for each logical file in the backup)
    - Attempt to execute the dynamic SQL restore script, noting any errors that might occur
    - If the restore was successful, execute DBCC CheckDB against the newly restored database, saving all results to a table
    - Once DBCC CheckDB has finished, check the results in the table for any problems and note the outcome
    - Drop the virtual database and the local copy of the backups
- Once every database in the instance has been checked, e-mail me the results. Each database is listed along with its outcome: the check was successful, the check found issues that I need to look into, the latest backup was too old, or the restore failed (along with any errors)
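The per-database steps above might be sketched roughly as follows. This is a minimal illustration in Python that only builds the T-SQL text; it is not the stored procedure described in this post. All function names, paths, and the `LogicalFile` type are hypothetical, and the `.vmdf`/`.vldf` extensions for the virtual data files are an assumption about how SQL Virtual Restore recognizes virtual files, so check them against the product documentation before relying on them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class LogicalFile:
    logical_name: str  # logical file name as recorded in the backup
    is_log: bool       # True for the transaction log file


def backup_is_too_old(finished_at, now, max_age_days):
    """Return True when the latest full backup exceeds the configured age."""
    return now - finished_at > timedelta(days=max_age_days)


def quote_literal(value):
    """Quote a value as a T-SQL Unicode string literal, doubling single quotes."""
    return "N'" + value.replace("'", "''") + "'"


def quote_name(name):
    """Quote an identifier with brackets, doubling any closing bracket."""
    return "[" + name.replace("]", "]]") + "]"


def build_restore_sql(db_name, backup_files, logical_files, target_dir):
    """Build a native-syntax RESTORE with one MOVE clause per logical file."""
    disks = ",\n     ".join("DISK = " + quote_literal(f) for f in backup_files)
    moves = []
    for i, lf in enumerate(logical_files):
        # Assumed extensions for virtual data and log files (simplified:
        # every non-log file is treated the same way here).
        ext = ".vldf" if lf.is_log else ".vmdf"
        path = f"{target_dir}\\{db_name}_{i}{ext}"
        moves.append(f"MOVE {quote_literal(lf.logical_name)} TO {quote_literal(path)}")
    options = ",\n     ".join(moves + ["RECOVERY"])
    return f"RESTORE DATABASE {quote_name(db_name)}\nFROM {disks}\nWITH {options};"


def build_checkdb_sql(db_name):
    """Build a DBCC CHECKDB statement that returns its findings as a result set."""
    return (f"DBCC CHECKDB ({quote_name(db_name)}) "
            "WITH NO_INFOMSGS, ALL_ERRORMSGS, TABLERESULTS;")


if __name__ == "__main__":
    files = [LogicalFile("Sales_Data", False), LogicalFile("Sales_Log", True)]
    print(build_restore_sql("Sales_Check", [r"D:\Staging\Sales_1.bak"],
                            files, r"D:\Virtual"))
    print(build_checkdb_sql("Sales_Check"))
```

Keeping the age check and the script generation as separate small functions means a stale backup can be reported without ever attempting the restore, which matches the order of the steps in the list.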

Using this method, I am able to consistency check all of my databases every week without ever having to lift a finger. I look over the e-mails I get from the script, for each Instance, and make sure everything was successful. If an error ever pops up, I know that I only have to spend my time on the databases that are actually having problems.
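The four outcomes reported in each e-mail could be modeled along these lines. This is only a sketch of the idea in Python, not the actual script; the `Outcome` names, the `classify` inputs, and the message layout are all hypothetical.

```python
from enum import Enum


class Outcome(Enum):
    OK = "CheckDB succeeded"
    ISSUES = "CheckDB found issues"
    TOO_OLD = "Latest full backup is too old"
    RESTORE_FAILED = "Restore failed"


def classify(backup_too_old, restore_error, checkdb_error_rows):
    """Map the facts gathered for one database to a single outcome."""
    if backup_too_old:
        return Outcome.TOO_OLD
    if restore_error is not None:
        return Outcome.RESTORE_FAILED
    if checkdb_error_rows > 0:
        return Outcome.ISSUES
    return Outcome.OK


def summarize(instance, results):
    """Build the e-mail body: one line per database with its outcome."""
    lines = [f"Consistency check results for {instance}"]
    for db_name, outcome, detail in results:
        line = f"{db_name}: {outcome.value}"
        if detail:
            line += f" ({detail})"
        lines.append(line)
    return "\n".join(lines)


def needs_attention(results):
    """Return the names of databases whose outcome is anything other than OK."""
    return [db for db, outcome, _ in results if outcome is not Outcome.OK]


if __name__ == "__main__":
    results = [
        ("Sales", classify(False, None, 0), None),
        ("HR", classify(False, "backup file missing", 0), "backup file missing"),
    ]
    print(summarize("INSTANCE01", results))
    print(needs_attention(results))
```

Checking the backup age first and the restore error second mirrors the order in which the steps run, so each database ends up with exactly one status in the summary.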

This has been a huge time-saver for me and has given me additional peace of mind.

Excellent write-up on how to use Virtual Restore. Well done.

This is exactly what I am looking to do, so thanks for the great article. Is there a script link that I can peek at to see how you are doing this? Thank you.

Good question, let me put something together and post it.

I apologize for the delay, https://www.sqlphilosopher.com/wp/2013/01/my-red-gate-hyperbac-dbcc-checkdb-script/

Have you had a chance to “put something together and post it?” I’ve attempted to tackle this, with some success, and it would be great to see how someone else has handled it: http://www.bobmihada.com/2011/10/darkmagic-with-redgate-virtual-restore.html

Thank you for following-up. I have not done this yet. Let me get something together today. I’ll reply to this comment and let you know.

Have you scripted this out? Can we see it? I scripted out the regular restore as a “virtual” restore because it removes it when it is done but I am trying to figure out how they are different in a script. From their script it looks like a straight restore, how does it know that it is virtual? Thanks.

That’s really a nice article on how to run consistency checks across virtual db. I am actually trying to implement this in our data warehouse prod server for an 8 TB DB. I am looking out for a sql script so that I can automate this whole process of restoring & running dbcc checks on them. I would really appreciate if you could share the scripts you’ve implemented in your environment.

I apologize for the delay, https://www.sqlphilosopher.com/wp/2013/01/my-red-gate-hyperbac-dbcc-checkdb-script/